tar cvjf compressed-shit.tar.bz2 /path/to/uncompressed/shit/

Only way to fly.

I’m curious about the contents in your compressed shit.

I stopped doing that because I found it painfully slow. And it was quicker to gzip and upload than to bzip2 and upload.

Of course, my hardware wasn’t quite as good back then. I also learned to stop adding the ‘v’ flag, because the bottleneck was actually stdout! (At least when extracting.)

For the last 14 years of my career, I was using stupidly overpowered Oracle Exadata systems exclusively, so “slow” meant 3 seconds instead of 1.

Now that I’m retired, I pretty much never need to compress anything.

If you download and extract the tarball as two separate steps instead of piping curl directly into tar xz (for gzip) / tar xj (for bz2) / tar xJ (for xz), are you even a Linux user?

The problem is that if the connection gets interrupted, your progress is gone. If you download to a file first and it gets interrupted, you can just resume the download later.
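Here is a minimal sketch of the two approaches that runs without a network. A local tarball stands in for the remote file, and cat stands in for curl -L URL. The project/README names are made up, and the real-world curl commands appear only as comments.

```shell
#!/bin/sh
# Sketch: "stream it" vs "download first, then extract".
# Offline stand-ins: a local tarball plays the server, cat plays curl.
set -eu
unset TAPE   # GNU tar uses $TAPE as the default archive when -f is absent
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT

mkdir -p "$work/src/project" "$work/streamed" "$work/from_file"
echo "hello" > "$work/src/project/README"
tar -czf "$work/project.tar.gz" -C "$work/src" project   # the "remote" file

# 1) Stream: extraction starts while bytes are still arriving; nothing is
#    stored on disk, but an interrupted transfer means starting over.
#    Real-world form: curl -L "$url" | tar -xz
cat "$work/project.tar.gz" | tar -xz -C "$work/streamed"

# 2) Download to a file, then extract. A partial file can be resumed:
#    Real-world form: curl -L -C - -o project.tar.gz "$url"
#    (-C - lets curl work out the resume offset from the partial file)
cp "$work/project.tar.gz" "$work/downloaded.tar.gz"
tar -xzf "$work/downloaded.tar.gz" -C "$work/from_file"
```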

I download and then tar. Curl pipes are scary.

They really, really aren’t. Let’s take a look at this command together:

curl -L [some url goes here] | tar -xz

Sorry the formatting’s a bit messy, Lemmy’s not having a good day today.

This command will start to extract the tar file while it is being downloaded, saving both time (since you don’t have to wait for the entire file to finish downloading before you start the extraction) and disk space (since you don’t have to store the .tar file on disk, even temporarily).

Let’s break down what these scary-looking command line flags do. They aren’t so scary once you get used to them, though. We’re not scared of the command line. What are we, Windows users?

- curl -L – tells curl to follow 3XX redirects, which it does not do by default. If the URL you paste into curl redirects (GitHub release links famously do) and you don’t specify -L, curl will output the redirect response itself (typically a short HTML stub, which browsers never normally show) instead of the file you wanted.

- tar -x – eXtract the tar file. Other tar “command” flags, of which you must specify exactly one, include -c for Creating a tar file and -t for lisTing the contents of a tar file (printing the name of every file in it without extracting anything).

- tar -z – tells tar that its input is gzip-compressed (without it, tar expects an uncompressed archive, which is a perfectly valid kind of tar file); you can also use -j for bzip2 and -J for xz

- tar -f, which you may be familiar with but which we don’t use here – tells tar which file you want it to read from (or write to, if you’re creating an archive). tar -xf somefile.tar will extract from somefile.tar. If you don’t specify -f at all, as we do here, tar will normally default to reading the archive from stdin (or writing it to stdout if you told it to create one). tar -xzf somefile.tar.gz is exactly equivalent to cat somefile.tar.gz | tar -xz (or tar -xz < somefile.tar.gz; why use cat to do something your shell has built in?)

- tar -v which you may be familiar with but which we don’t use here – tells tar to print each filename as it extracts the file. If you want to do this, you can, but I’d recommend telling curl to shut up so it doesn’t mess up the terminal trying to show download progress also: curl -L --silent [your URL] | tar -xvz (or -xzv, tar doesn’t care about the order)
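A small sketch to check the equivalences from the list above on a throwaway archive. somefile.tar.gz and the docs/ directory are made up for the example, and it assumes GNU tar, which reads stdin when -f is absent unless the TAPE environment variable says otherwise.

```shell
#!/bin/sh
# Sketch: three equivalent ways to hand the same gzipped tarball to tar.
set -eu
unset TAPE   # GNU tar uses $TAPE as the default archive when -f is absent
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT

mkdir -p "$work/src/docs" "$work/x1" "$work/x2" "$work/x3"
printf 'a\n' > "$work/src/docs/a.txt"
printf 'b\n' > "$work/src/docs/b.txt"
tar -czf "$work/somefile.tar.gz" -C "$work/src" docs

tar -xzf "$work/somefile.tar.gz" -C "$work/x1"       # -f names the archive
tar -xz -C "$work/x2" < "$work/somefile.tar.gz"      # no -f: stdin via a redirect
cat "$work/somefile.tar.gz" | tar -xz -C "$work/x3"  # no -f: stdin via a pipe

# -t lists member names instead of extracting; sort makes the order stable.
listing=$(tar -tzf "$work/somefile.tar.gz" | LC_ALL=C sort)
printf '%s\n' "$listing"
```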

You may have noticed also that in the first command I showed, I didn’t put a - in front of the arguments to tar. This is because tar is so old that it still accepts the traditional “old-style” option syntax: it will interpret its first argument as a bundle of flags whether or not there’s a dash in front of it. tar -xz and tar xz are exactly equivalent. tar does not care.
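A quick sketch showing the dashed and dashless forms doing the same job (file names are made up; assumes GNU tar). In the old style, the first word is the flag bundle and f takes the next word as the archive name.

```shell
#!/bin/sh
# Sketch: old-style (no dash) vs dashed short options.
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
mkdir -p "$work/src" "$work/a" "$work/b"
echo hi > "$work/src/f.txt"

tar czf "$work/t.tgz" -C "$work/src" f.txt   # old style: create, gzip, file

tar -xzf "$work/t.tgz" -C "$work/a"          # dashed short options
tar xzf "$work/t.tgz" -C "$work/b"           # the same, old style
```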

It’s not scary from the flags, but rather what is inside the tar/zip.

Thanks for the explanation, I might use more pipes now. Is it correct that tar will restore the files from the tarball into the current directory?
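In the usual case, yes: members are written relative to the current directory, and -C changes that directory first. A cautious habit, sketched below under the assumption of GNU tar with made-up file names, is to list an unfamiliar archive with -t before extracting it into a fresh directory. With a pipe, the same idea is curl -L URL | tar -xz -C somedir.

```shell
#!/bin/sh
# Sketch: look inside first, then extract here or somewhere else.
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
mkdir -p "$work/src/pkg" "$work/here" "$work/elsewhere"
echo 'echo hi' > "$work/src/pkg/install.sh"
tar -czf "$work/pkg.tar.gz" -C "$work/src" pkg

# List member names without extracting anything.
tar -tzf "$work/pkg.tar.gz"

# Extract relative to the current directory...
( cd "$work/here" && tar -xzf "$work/pkg.tar.gz" )
# ...or let -C pick the target directory.
tar -xzf "$work/pkg.tar.gz" -C "$work/elsewhere"
```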

I’m in this picture and I resent it.

Zip makes different tradeoffs. Its compression is basically the same as gz, but you wouldn’t know it from the file sizes.

Tar archives everything together, then compresses. The advantage is that there are more patterns available across all the files, so it can be compressed a lot more.

Zip compresses individual files, then archives. The individual files aren’t going to be compressed as much because they aren’t handling patterns between files. The advantages are that an error early in the file won’t propagate to all the other files after it, and you can read a file in the middle without decompressing everything before it.
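To see the cross-file effect, this sketch compares one gzip stream over a whole tarball with gzipping each file on its own. Per-file gzip is only a stand-in for zip: zip also uses DEFLATE per file but adds its own headers and central directory, which this ignores. The 100 identical files are a deliberately redundant, made-up input.

```shell
#!/bin/sh
# Sketch: "archive then compress" vs "compress each file separately".
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
mkdir -p "$work/files"
for i in $(seq 1 100); do
  seq 1 500 > "$work/files/file$i.txt"   # identical contents on purpose
done

# One compressed stream over everything: later files match earlier ones.
tar -czf "$work/solid.tar.gz" -C "$work" files
solid=$(wc -c < "$work/solid.tar.gz")

# Each file compressed independently: no cross-file matches possible.
per_file=0
for f in "$work"/files/*.txt; do
  n=$(gzip -c "$f" | wc -c)
  per_file=$((per_file + n))
done

echo "tar.gz: $solid bytes, per-file gzip total: $per_file bytes"
```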

Yeah that’s a rather important point that’s conveniently left out too often. I routinely extract individual files out of large archives. Pretty easy and quick with zip, painfully slow and inefficient with (most) tarballs.

Can you evaluate the directory tree of a tar without decompressing? Not sure if gzip/bzip2 preserve that.
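With a compressed tarball you can list the tree or pull out one member without writing everything to disk, but tar still has to decompress the stream from the start to find what you asked for. A sketch, assuming GNU tar, with a made-up big/sub/ tree:

```shell
#!/bin/sh
# Sketch: list a .tar.gz and extract a single member from it.
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
mkdir -p "$work/big/sub" "$work/one"
for i in $(seq 1 50); do echo "file $i" > "$work/big/sub/f$i.txt"; done
tar -czf "$work/big.tar.gz" -C "$work" big

# The directory tree: nothing is extracted, but the gzip stream is
# still decompressed sequentially to reach every header.
tar -tzf "$work/big.tar.gz" > "$work/listing.txt"

# One member by its stored name; tar reads sequentially to find it.
tar -xzf "$work/big.tar.gz" -C "$work/one" big/sub/f42.txt
```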

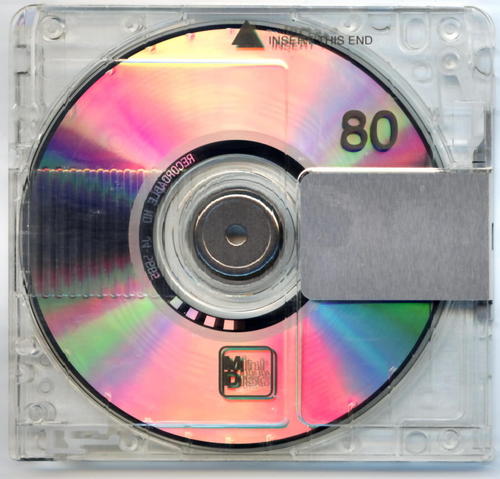

Nowhere in here do you cover bzip, the subject of this meme. And tar does not compress.

It’s just a different layer of compression. Better than gzip generally, but the tradeoffs are exactly the same.

Well, yes. But your original comment has inaccuracies due to those 2 points.

A tar archive also preserves file permissions, and can preserve groups/ownership if needed.
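A sketch of the permission round trip, assuming GNU tar and GNU stat (for stat -c). The run.sh name is made up. Restoring owners and groups generally requires running the extraction as root.

```shell
#!/bin/sh
# Sketch: tar stores mode bits (and owner/group) in each member header.
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
mkdir -p "$work/src" "$work/out"
echo '#!/bin/sh' > "$work/src/run.sh"
chmod 750 "$work/src/run.sh"
tar -cf "$work/perm.tar" -C "$work/src" run.sh

# -tv shows the stored mode plus owner/group names.
tar -tvf "$work/perm.tar"

# -p restores the stored mode bits instead of applying the umask.
tar -xpf "$work/perm.tar" -C "$work/out"
mode=$(stat -c '%a' "$work/out/run.sh")
echo "$mode"
```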

I use the command line every day, but can’t be bothered with all the compression options of tar and company.

zip -r thing.zip things/ and unzip thing.zip are temptingly more straightforward. Need more compression? zip -r -9 thing.zip things/. Need a faster option? Use a smaller digit.

“yes i would love to tar -xvjpf my files” – statement dreamed up by the utterly insane

Present, I’m the tar cvJf insane

tar czf thing.tgz things/
tar xzf thing.tgz

Yes, and you still need zhe mnemonics.

There’s gotta be a buncha tools that Clippy into the terminal to say “did you mean ____?” right? Including some new ones where they trained/fine-tuned a language model on man pages?

Interesting that it’s not the most popular thing to use a GUI and use shortcuts for everything you want to do, while still having the option to click through a menu or wizard for whatever you haven’t memorized. I suppose the power and speed of the command line are difficult to match if you introduce anything else, and if you spend time using a user interface, that’s time you can’t spend honing your command line skills.

There’s thefuck, but it hasn’t given me good suggestions.

Zip is fine (I prefer 7z), until you want to preserve attributes like ownership and read/write/execute rights.

Some zip programs support saving Unix attributes, others do not. So when you download a zip file from the internet, it’s always a gamble.

Tar + gzip/bz2/xz is more Linux-friendly in that regard.

Also, zip compresses each file separately and then collects all of them in one archive. Tar collects all the files first, then you compress the tarball, which is more efficient and produces a smaller file.

The problem with that is that it will not preserve flags and access rights.

.tar.gz, or .tgz if I’m in a hurry… or shipping to MS-DOS

I still wonder what that’s like. Somebody must still occasionally get a notification that SOMEWHERE somebody paid for their WinRAR license and is like “WOAH WE GOT ANOTHER ONE!”

Never looked back since 7z though. :D

Me removing the plastic case of a 2.5’ sata ssd to make it physically smaller

That’s a big drive.

7z gang joined the chat…

all the cool kids use .cab

I mean xz/7z has kind of been the way for at least a decade now

Well, tar.zstd is starting to be the thing now.

First bundling everything in a tar file just to compress the thing in an individual step is kinda stupid, though. Everything takes much longer because of that. If you don’t need to preserve POSIX permissions, tar is pointless anyway.

Slower, yes. More compression, yes. Stupid, no. tar serves a purpose beyond preserving permissions.

Use an archiving format that does both at once then, covering whatever use cases tar has while also compressing. The two steps are stupid, no arguing against that.
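On the “two steps” point, tar plus a compressor is normally a single pipeline with both processes running at once, so no uncompressed .tar is written to disk first. This sketch, assuming GNU tar and a made-up things/ directory, shows that tar -z gives the same archive contents as an explicit tar | gzip pipe. The zstd lines are comments only, since zstd may not be installed.

```shell
#!/bin/sh
# Sketch: "tar -z" vs an explicit tar | gzip pipeline.
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
mkdir -p "$work/things"
seq 1 5000 > "$work/things/data.txt"

# GNU tar runs gzip as a filter on its own output...
tar -czf "$work/a.tar.gz" -C "$work" things
# ...which matches this pipeline; tar and gzip run concurrently.
tar -cf - -C "$work" things | gzip -c > "$work/b.tar.gz"

# Swapping compressors is swapping the filter (if zstd is installed):
#   tar -cf - things | zstd -c > things.tar.zst
#   zstd -dc things.tar.zst | tar -xf -
```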

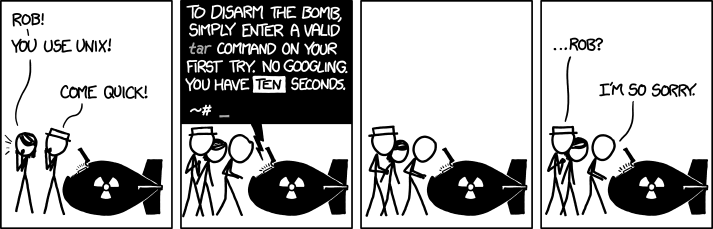

Oblig. XKCD:

tar eXtractZheVeckingFile

You don’t need the v, it just means verbose and lists the extracted files.

You don’t need the z, it auto detects the compression

That’s still kinda new. It didn’t always do that.

Per https://www.gnu.org/software/tar/, it’s been the case since 2004, so for about 19 and a half years…
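A sketch of that auto-detection, assuming GNU tar and a made-up hello.txt. Plain tar xf on a .tar.gz works because tar inspects the file’s leading bytes. Detection needs a file tar can inspect. When the archive arrives on stdin (curl … | tar), GNU tar typically stops and asks for the flag (for example -z), so the pipe form still needs it.

```shell
#!/bin/sh
# Sketch: no -z needed when tar reads a named, compressed file.
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
mkdir -p "$work/src" "$work/out"
echo hi > "$work/src/hello.txt"
tar -czf "$work/hello.tar.gz" -C "$work/src" hello.txt

# GNU tar notices the gzip signature and decompresses on its own
# (likewise for .tar.bz2 / .tar.xz if bzip2 / xz are installed).
tar -xf "$work/hello.tar.gz" -C "$work/out"
```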

Telling someone that they are Old without saying they are old…

Something something don’t cite the old magics something something I was there when it was written…

Right, but you have no way of telling what version of tar that bomb is running

Yeah, I just tell our Linux newbies tar xf, as in “extract file”, and that seems to stick perfectly well.

You may not, but I need it. Data anxiety is real.

Me trying to decompress a .tar file

Joke’s on you, .tar isn’t compression

That’s not going to stop me from getting confused every time I try!

That’s yet another great joke that GNU ruined.

tar -hboom

tar -xzf

(read with German accent:) extract the files

Ixtrekt ze feils

German here, and no shit, that is how I’ve remembered it ever since the first time someone made that comment.

Same. Also German btw 😄

Not German but I remember the comment but not the right letters so I would have killed us all.

That’s so good I wish I needed to memorize the command

tar -uhhhmmmfuckfuckfuck

without looking, what’s the flag to push over ssh with compression

scp

man tar

you never said I can’t run a command before it.
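For the record, there isn’t a single tar flag for pushing over ssh. The common trick is a pipe, and scp -C turns on ssh’s own compression if you just want to copy files. In this sketch, sh -c stands in for ssh user@host so it runs locally. The host and paths in the comment are placeholders.

```shell
#!/bin/sh
# Sketch: tar a directory into a pipe and unpack it on the "other side".
# Real-world form (host/paths are placeholders):
#   tar -czf - things | ssh user@host 'tar -xzf - -C /dest'
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
mkdir -p "$work/things" "$work/remote_dest"
echo payload > "$work/things/file.txt"

# sh -c plays the remote shell that ssh would run.
tar -czf - -C "$work" things | sh -c "tar -xzf - -C '$work/remote_dest'"
```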

The Fish shell shows me just the past command with tar

So I don’t need to remember strange flags

I use zsh and love the fish autocomplete, so I use this:

https://github.com/zsh-users/zsh-autosuggestions

Also have fzf for Ctrl+R to fuzzy-find previous commands.

I believe it comes with oh-my-zsh, it just has to be enabled in plugins and itjustworks™

Mf’ers act like they forgot about zstandard

This guy tar balls

I use .tar.gz in personal backups because it’s built in, and because it’s the command I could get custom subdirectory exclusion to work on.
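A sketch of subdirectory exclusion with GNU tar’s --exclude, using a made-up home/ tree. Excluding a directory also skips everything under it. Putting --exclude before the paths it should apply to avoids surprises with newer GNU tar versions.

```shell
#!/bin/sh
# Sketch: back up a tree but leave one subdirectory out.
set -eu
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
mkdir -p "$work/home/docs" "$work/home/.cache/junk"
echo keep > "$work/home/docs/notes.txt"
echo skip > "$work/home/.cache/junk/blob"

tar -czf "$work/backup.tar.gz" --exclude='home/.cache' -C "$work" home

listing=$(tar -tzf "$work/backup.tar.gz")
printf '%s\n' "$listing"
```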