That’s me with SNES emulators…

I only watch free tube in 12k.

Damn. I haven’t built a new computer in 10 years.

Are we seriously here now? DAAAAAAMN

I got old.

Not THAT far. But 32GB RAM is getting more common, though I haven’t heard of many people using 64, let alone more than that.

Also, storage is usually not that extreme, though multiple terabytes are also getting more common on the higher end.

Just this month, I upgraded to 16GB RAM and 1TB SSD so it isn’t that extreme for most people.

CPU- and GPU-wise, most people aren’t running the best of the best. There are still plenty of people using 10-series Nvidia GPUs, and most people seem to be on 20-series or 30-series GPUs (and usually not the top cards of those generations).

I just now upgraded to 64GB of DDR4 from eBay. But that’s because my Blender projects were getting really big and I’d crashed a few times. Linux is awesome, but it’s really scary how it just hard-freezes or mercilessly thrashes when low on memory. 😬

…That and I finally get glorious RGB RAM lighting up my case. :D weee!

For gaming? I haven’t seen that much RAM be useful yet. And in Blender… likely because I have a crazy amount of undo levels enabled and have Firefox open…a few tabs…maybe more than a few tabs…maybe a lot of tabs…

really scary how it just hard-freezes or mercilessly thrashes when low on memory.

That’s what the swap partition is for?

Me neither. Obviously, this has to be used for hyper realistic VR interactive porn.

Someone who is not me wants to know how big a difference there would be between, like, a 2070 Super and a 4090 for some of that stuff.

They also want to know if there’s some more recent stuff they don’t know about yet.

One should keep bit rate in mind for a smoother experience. Or so I’ve read.

I’ve had my Valve Index for 5 years and never heard about bit rate affecting it. What am I looking for?

Hmm… Quality.

My guess is that you’ll run into diminishing returns less quickly if you focus on the haptic hardware rather than the graphics.

The guy who is asking has some stuff in that department; he’s more interested in the visuals now.

If he’s that desperate and has the money to spend, he’ll probably enjoy it. I’m not sure if I’d call that grade of… dedication to porn very… healthy.

YouTube says you’re a bot.

Time to watch PeerTube.

YouTube is usually the first thing I open on first boot of a new machine. That way I know if the sound is working, network is working and video drivers are ok all at once.

What do you check two hours later?

YouTube

And after that?

Redtube

it’s for the WINE overhead :)

Is 256GB RAM enough for YouTube?

Is a Bugatti enough for a short trip to the supermarket around the corner?

Is science fiction enough for talking about politics?

Politics in my sci-fi? Yuck.

Gonna go enjoy my politics-free shows like Star Trek, Star Wars, Black Mirror, Westworld, and The Man In the High Castle.

Popular scifi, written to appeal to the majority, for tv and movies, is actually only one special kind of scifi. And the majority isn’t known for its depth or taste.

I’m not saying that there aren’t exceptions, but ya, that’s how it is.

There’s a whole world of written scifi where the point is basically to show you something strange. Ideas so weird that scifi is the only way to convey them.

Here’s some free, online scifi by good authors. Your mind will be blown.

https://www.fimfiction.net/story/62074/friendship-is-optimal

https://library.gift/fiction/9F6810564C853BD3EA7223E4AB1FA575

Do you like movies about gladiators?

in chrome? no

A Raspberry Pi 5 can play YouTube in HD just fine, so if you wanna save 4000 bucks, maybe do that instead.

You can also just buy a used laptop or business computer which is infinitely better and cheaper.

Cheaper than a raspberry? O.o

By the time you factor in a case, cooling, SD card, and power supply a Pi can cost about as much (~$100) as an off lease i5.

Guess it depends on how much hardware you already got lying around. I could scrounge up an SD card, an old phone charger, a fan, keyboard and mouse from my supply and I’ve got a 3D printer.

I’m all for recycling though. I’m just not sure if a $100 i5 system could handle all that bloat on the web.

Also: I really like AV1. 😅

Have you had success with phone chargers? My RPi4 is very picky about power and is unstable on anything but the official power supply.

SD card, sure.

Old phone charger? Might not be strong enough to power a Pi 4/5; this isn’t the Pi 3 days with a microUSB.

“… and I’ve got a 3D printer.“

Yeah, you’re “ahead” of most people with that one.

This i5 could probably handle it, and you’ve got $$$ left over for an SSD.

Go with the 8500 i5 instead. I have that with a 1650 for the kids.

Agreed.

I was proving that you can find a halfway decent machine that’s more capable than a Pi 5 at the same price point (~$100).

If you have that lying around, you can still beat a pi 5 by only buying an i5 motherboard instead of an entire pc. As to handling the bloat, it’s faster.

Yep. First of all, the person who said an RPI5 can show YouTube HD just fine is lying. It’s still stuttery and drops frames (better than the RPI4b, but still not great). Second, you’ll end up dropping well north of $100 for the RPI5, active cooler, case, memory card (not even mentioning an M.2 HAT), power supply, and cable/adapter to feed standard HDMI.

You can find some really solid used laptops and towers in that price range, not to mention the N100 NUC. And they’ll all stream YouTube HD much better, as well as provide a much smoother desktop experience overall.

Don’t get me wrong, I love me a RPI, I run a couple myself. They’re just not great daily drivers, especially if you want to stream HD content.

I’m the person you’re accusing of lying. To your point, there are some dropped frames but that’s not a problem for me, and I figure most people wouldn’t notice 10 dropped frames out of every 1000, or whatever similar ratio it is. I have a rpi for a media PC and I’m happy with it. I play HD video in several web apps and only the shittiest of them (prime and paramount+) ever have a noticeable issue with playback.

People who complain about RPis being expensive kinda make me scratch my head. Like, do you not count the accessories you buy for other hardware? It seems the comparison is between the RPi plus every single thing you buy for it vs. a laptop/PC by itself with no accessories (which you will almost certainly be buying some amount of). I get that it sucks that these devices have gone up in price, but yeah, the accessories aren’t all that much more than for any other device. You could have a very solid RPi setup for $120 all-in. And it would be more durable than some sketchy Acer laptop.

My Pi5 setup with a very fast SD card plays YouTube without dropping frames or stuttering at 1080p, that other guy is wrong. The UI is slow and a bit janky, but once YT is loaded and fullscreen, it plays perfectly. It plays 24/7 on a TV in our living room for my partner’s WFH and for our cats when they’re done.

Thank you for that validation! I actually just tested mine and saw the same results as you describe. I would drop about 30-50 frames going full screen and then only one here or there every few minutes. It is damned close to perfect.

Youtube on the RPI5 drops frames and is stuttery. If that’s fine for you, great. But I’d argue it’s not what people consider a good viewing experience. See https://www.youtube.com/watch?v=UBQosbjl9Jw&t=278s and https://youtu.be/nBtOEmUqASQ?si=VXFGVBid5wCrhu-u&t=797 if you’d like more info.

The accessories I mentioned for the RPI5 are the bare essentials just to get the thing to power up, boot to a web browser, and connect to a monitor to try to play YouTube, which is the foundation of your original comment. Please show me where a $120 used laptop or desktop tower needs additional hardware purchases to boot and plug in an HDMI cable.

You’re picking the wrong fight with the wrong guy, friend. I’m a huge RPI advocate and I think they are great tools for specific use cases. I simply want to point out that if folks are considering it in the hopes that it’s a small and cheap way to watch YouTube, they’re gonna have a bad time.

Pi 5 desktop kit is like $150 isn’t it?

Yeah you can beat that performance and price with some used hardware. Will cost more in power though.

Well, actually, with $150 you could buy a used business SFF/tiny PC with an 8th/9th-gen i5 CPU, and I don’t think it would consume that much more than an RPi.

Only at idle.

At peak the sff PCs are going to be at least triple the ~30W of the pi 5.

Edit: You’ll get way more out of the sff though, which is what I was saying. Tiny/mini/micro is my entire self hosted environment (as well as lab and work setup for the most part).
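To put the idle-vs-peak power argument in numbers, here’s a rough sketch of what a constant draw costs over a year. The ~30W and ~90W figures come from the comment above (the Pi 5’s peak and “at least triple” that for an SFF box); the $0.15/kWh price is a made-up placeholder, and running flat out 24/7 is a worst case, since both machines idle far lower.

```python
# Rough yearly energy cost for a machine running 24/7 at a constant draw.
# Wattages are from the thread; the electricity price is a hypothetical placeholder.
HOURS_PER_YEAR = 24 * 365  # 8760


def yearly_cost(watts: float, price_per_kwh: float) -> float:
    """Cost of running a constant load for one year."""
    kwh = watts * HOURS_PER_YEAR / 1000
    return kwh * price_per_kwh


PRICE = 0.15  # hypothetical $/kWh, adjust for your utility
for name, watts in [("Pi 5 at peak", 30), ("SFF i5 at ~3x that", 90)]:
    print(f"{name}: ~${yearly_cost(watts, PRICE):.0f}/year")
```

Scale the result by how much of the day the box actually spends under load; for a mostly idle media or self-hosting machine, the real gap is much smaller.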

At peak the sff PCs are going to be at least triple the ~30W of the pi 5.

Are you sure? I think that for the same tasks, the i5 (at least 9th gen) is more power-efficient than the RPi 5. I was a Pi guy, I had them all over the place, but like you, I’m now using SFF/tiny used PCs (when I don’t need GPIO).

At least as far as my setup goes, yeah. I’ve got 5th- to 10th-gen machines; under high loads I’ll see spikes to 80+ watts. The highest is 170W, but those have Nvidia Quadros in them.

Edit: For GPIO now I’ll just use an ESP32 or something instead.

My only pi usage these days is work stuff, and orangepi is supported there. In terms of arm, also Jetson, but that’s kind of outside the discussion here.

You could get away with nothing but the Pi, depending on what you’ve got lying around.

Sure, depends on needs of course. Just saying I can see how someone could arrive at a better price point than a pi with more performance.

Just not more per watt (except in more burst demanding scenarios).

The pi foundation lost a lot of goodwill with me though, so I stick to the alternatives (orangepi for example) if I need one.

Edit: I a whole word.

Oh man, I tried an orangepi and I cannot express how sketchy that thing was, top to bottom. It had a lot of power, but that was the only good thing about it (it was a lot more expensive than an RPi too). That shitty flashing utility alone makes it worth picking something different.

I had so much trouble trying different OSes on it. I think actually none of them felt stable and I tried like 5 (multiple versions of each) I think.

I’ve got very specific needs when it comes to Pi-alikes, so I can only speak to how I’ve used it.

I still won’t support the pi foundation though.

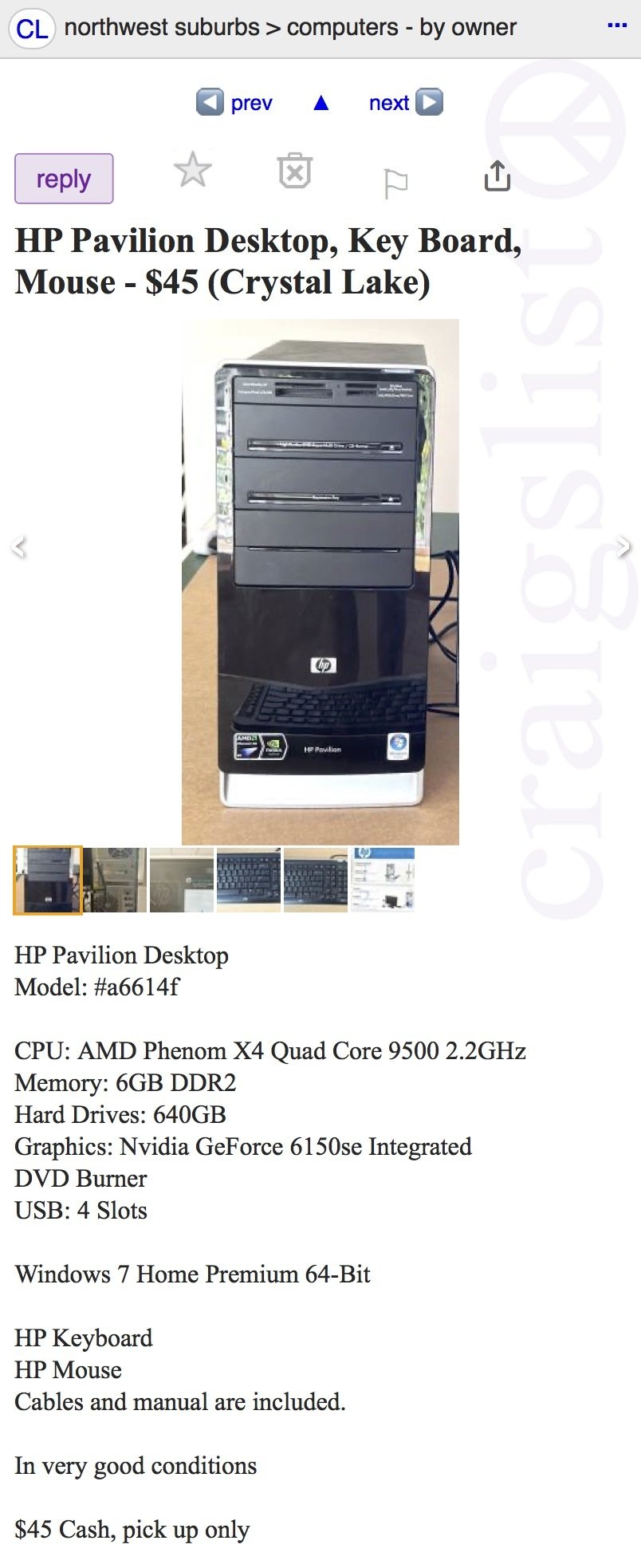

Yes. Here’s a random listing on CL.

Phenom X4? That thing probably can’t really handle YT HD streams.

HP

Kinda says it all.

Used stuff is generally cheaper than new stuff.

Yeah, but I wouldn’t be sure used stuff below 100€/$/whatever could handle the internet too well, nowadays.

Anything made in the past 10-15 years still works great. I have a couple of really old thin clients that I bought for around $20, and I dumped my Pis when the prices were way up. One runs OctoPrint and the other runs Lubuntu out in the garage, so I can look up vehicle specs and other things while I’m out there. I have a fifth-gen Intel laptop that still works great, and a desktop with a Ryzen 3000-series CPU that works just fine; both were bought used for under $100. Raspberry Pi is good for certain tasks, but using it as a desktop makes little sense. Even now I’m writing this message on an Android phone that cost around $100, with no issues.

CPU power hasn’t changed much. They’ve added more features over the years, but power hasn’t changed a lot; it’s mainly Windows that has gotten more bloated, so you need more RAM to run it.

Yeah, what I did is get one of those Dell thin client laptops. It runs great. I just open up Parsec and remote in to my server, which has an i9, 256GB RAM, a 4090, like 100TB of HDDs, and 4TB of NVMe.


That was the joke.

I mean, yeah, I realize it was the joke. I think I was just adding context some people may not know about. I didn’t know a rpi could do that task until I started researching media PC options.

If it weren’t for YouTube’s shitty war on adblockers, I’d still be able to watch YouTube at 1080p on a 30-buck Android TV thingy.

I’d have to check if someone has built an alternative app to keep watching it, because the device’s power was no issue. On a minimal Kodi installation it just worked fine.

HHD+

Horny hard disk. Incidentally it’s infinitely better than HDD.

What, the Horny Droopy Dick?

41TB?? What are you doing? Recording a lossless video of a 24/7 livestream?

Just another flight simmer, sounds like

I have about 60TB in mine. Media, man. Collecting is addictive.

Honestly feels like that’s necessary, with how much youtube jitters on my gaming rig. At least before I remember that YouTube runs like shit on every machine.

YouTube is by far the slowest website I visit, it’s so bloated.

Are you maybe using Windows? I’ve heard it can be slow sometimes, even on modern hardware.

Probably Firefox. Firefox handles video like shit.

Firefox doesn’t use hardware acceleration on Linux I think?

YouTube on Firefox is a complete no go as far as I’ve tried. And not sure how much Firefox is to blame since other video players work just fine on it.

But no, I’m using Opera or other chromium browsers for Youtube nowadays. Still jitters from time to time.

The heck is HHD+? Is this some newfangled storage tech I’m too SSD to understand?

As in “and”. At least, that’s how I interpret it.

What’s just HHD then?

Why would you buy a 25TB HDD? Have they never heard of RAID?

Hybrid Hard Drive

https://www.techtarget.com/searchstorage/definition/hybrid-hard-drive

Harder Hard Drive

I’m self hosting LLMs for family use (cause screw OpenAI and corporate, closed AI), and I am dying for more VRAM and RAM now.

Seriously looking at replacing my 7800X3D with Strix Halo when it comes out, maybe a 128GB board if they sell one. Or a 48GB Intel Arc if Intel is smart enough to sell that. And I would use every last megabyte, even if I had a 512GB board (which is the bare minimum to host DeepSeek V3).

I know it’s a downvote earner on Lemmy, but my 64GB M1 Max with its unified memory runs these large-scale LLMs like a champ. My 4080 (which is ACHING for more VRAM) wishes it could. But when it comes to image generation, the 4080 smokes the Mac. With image generation, you can think of VRAM like an aperture: less VRAM limits how much you can do in a single pass.

The issue with Macs is that Apple does price gouge for memory, your software stack is effectively limited to llama.cpp or MLX, and 70B class LLMs do start to chug, especially at high context.

Diffusion is kinda a different duck. It’s more compute heavy, yes, but the “generally accessible” software stack is also much less optimized for Macs than it is for transformers LLMs.

I view AMD Strix Halo as a solution to this, as it’s a big IGP with a wide memory bus like a Mac, but it can use the same CUDA software stacks that discrete GPUs use for that speed/feature advantage… albeit with some quirks. But I’m willing to put up with that if AMD doesn’t price gouge it.

Apple price gouges for memory, yes, but a theoretical 64GB 4090 would have cost as much in this market as the whole computer did. If you’re using it to its full capabilities, then I think it’s one of the best values on the market. I just run the 20B models because they meet my needs (and in Open WebUI I can combine a couple at that size), as I also use the Mac for personal stuff.

I’ll look into the AMD Strix though.

GDDR is actually super cheap! I think it would only be like another $75 on paper to double the 4090’s VRAM to 48GB (like they do for pro cards already).

Nvidia just doesn’t do it for market segmentation. AMD doesn’t do it for… honestly I have no idea why? They basically have no pro market to lose; the only explanation I can come up with is that their CEOs are colluding because they are cousins. And Intel doesn’t do it because they didn’t make a (consumer) GPU that was really worth it until the B580.

Oh I didn’t mean “should cost $4000” just “would cost $4000”. I wish that the vram on video cards was modular, there’s so much ewaste generated by these bottlenecks.

Oh I didn’t mean “should cost $4000” just “would cost $4000”

Ah, yeah. Absolutely. The situation sucks though.

I wish that the vram on video cards was modular, there’s so much ewaste generated by these bottlenecks.

Not possible, the speeds are so high that GDDR physically has to be soldered. Future CPUs will be that way too, unfortunately. SO-DIMMs have already topped out at 5600, with tons of wasted power/voltage, and I believe desktop DIMMs are bumping against their limits too.

But look into CAMM modules and LPCAMMs. My hope is that we will get modular LPDDR5X-8533 on AMD Strix Halo boards.

Not running any LLMs, but I do a lot of mathematical modelling, and my 32 GB RAM, M1 Pro MacBook is compiling code and crunching numbers like an absolute champ! After about a year, most of my colleagues ditched their old laptops for a MacBook themselves after just noticing that my machine out-performed theirs every day, and that it saved me a bunch of time day-to-day.

Of course, be a bit careful when buying one: Apple cranks up the price like hell if you start speccing out the machine a lot, especially for RAM.

You can always use system memory too. Not exactly UMA, but close enough.

Or just use iGPU.

It fails whenever it exceeds the vram capacity, I’ve not been able to get it to spillover to the system.

You don’t want it to anyway, as “automatic” spillover with an LLM is painfully slow.

The RAM/VRAM split is manually configurable in llama.cpp, but if you have at least 10GB VRAM, generally you want to keep the whole model within that.
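For the manual split, llama.cpp’s `--n-gpu-layers` (`-ngl`) option sets how many layers go to VRAM, with the rest staying in system RAM. Here’s a back-of-the-envelope way to pick that number. It’s only a sketch: it assumes the weights are spread evenly across layers (not quite true, since embedding and output layers differ in size), and the 1.5GB reserve for KV cache and driver overhead is a guess, not a llama.cpp default.

```python
def layers_that_fit(vram_gb: float, model_gb: float, n_layers: int, reserve_gb: float = 1.5) -> int:
    """Estimate how many layers can live in VRAM for a partial offload.

    Assumes every layer is the same size and keeps `reserve_gb` free
    (a guessed margin for KV cache, CUDA context, display, etc.).
    """
    per_layer_gb = model_gb / n_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    return min(n_layers, int(usable_gb // per_layer_gb))


# Hypothetical examples: an 8GB quant with 32 layers on a 10GB card,
# and a ~40GB quant with 80 layers on a 24GB card.
print(layers_that_fit(10, 8, 32))   # 32 (whole model fits)
print(layers_that_fit(24, 40, 80))  # 45 (partial offload)
```

The second result would translate to something like `-ngl 45`; if loading runs out of memory, drop it by a few layers, and remember that a longer context grows the KV cache too.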

Oh I meant for image generation on a 4080, with LLM work I have the 64gb of the Mac available.

Oh, 16GB should be plenty for SDXL.

For flux, I actually use a script that quantizes it down to 8 bit (not FP8, but true quantization with huggingface quanto), but I would also highly recommend checking this project out. It should fit everything in vram and be dramatically faster: https://github.com/mit-han-lab/nunchaku
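For a sense of what “true” integer quantization means (as opposed to just casting to an FP8 type), here is a minimal per-tensor absmax int8 sketch in plain Python. It is not how quanto is implemented internally (real libraries usually add per-channel scales and optimized kernels); it only shows the core idea of storing small integers plus one float scale.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric absmax quantization of one tensor to integers in [-127, 127]."""
    absmax = max((abs(w) for w in weights), default=0.0)
    scale = absmax / 127 if absmax > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats; each value is off by at most scale / 2."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
print(q)                          # [50, -127, 0, 100]
print(dequantize_int8(q, scale))  # close to the original weights
```

Each int8 value takes one byte instead of two for fp16, which is where the roughly halved memory footprint comes from.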

I just run SD1.5 models, my process involves a lot of upscaling since things come out around 512 base size; I don’t really fuck with SDXL because generating at 1024 halves and halves again the number of images I can generate in any pass (and I have a lot of 1.5-based LORA models). I do really like SDXL’s general capabilities but I really rarely dip into that world (I feel like I locked in my process like 1.5 years ago and it works for me, don’t know what you kids are doing with your fancy pony diffusions 😃)
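The “halves and halves again” figure lines up with simple latent-size arithmetic. Both the SD1.5 and SDXL VAEs downsample each side by 8 into a 4-channel latent, so doubling the resolution on each side quadruples the latent the UNet has to process. Treat this as a lower bound on the cost: attention memory can grow faster than linearly with latent size, depending on the attention implementation.

```python
VAE_DOWNSCALE = 8     # SD1.5 and SDXL VAEs shrink each side by 8x
LATENT_CHANNELS = 4   # both work in a 4-channel latent space


def latent_elements(width: int, height: int) -> int:
    """Number of values in the latent tensor for one image (batch size 1)."""
    return (width // VAE_DOWNSCALE) * (height // VAE_DOWNSCALE) * LATENT_CHANNELS


sd15 = latent_elements(512, 512)     # 64 x 64 x 4 = 16384
sdxl = latent_elements(1024, 1024)   # 128 x 128 x 4 = 65536
print(sdxl / sd15)                   # 4.0, i.e. roughly a quarter as many images per pass
```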

I don’t know what the pricing is like, but maybe it’s worth building a separate server with a second-hand TPU. Used server CPUs and RAM are apparently quite affordable in the US (assuming you live there), so maybe that’s the case for TPUs as well. And commercial GPUs/TPUs have more VRAM.

second-hand TPU

From where? I keep a look out for used Gaudi/TPU setups, but they’re like impossible to find, and usually in huge full-server configs. I can’t find Xeon Max GPUs or CPUs either.

Also, Google’s software stack isn’t really accessible. TPUs are made for internal use at Google, not for resale.

You can find used AMD MI100s or MI210s, sometimes, but the go-to used server card is still the venerable Tesla P40.

Aren’t LLMs external-memory algorithms at this point? As in, all the data won’t fit in RAM?

No, all the weights, all the “data” essentially has to be in RAM. If you “talk to” an LLM on your GPU, it is not making any calls to the internet, but making a pass through all the weights every time a word is generated.

There are systems to augment the prompt with external data (RAG is one term for this), but fundamentally the system is closed.
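Because every generated token needs a full pass over the weights, memory bandwidth sets a rough ceiling on generation speed for a dense model: tokens per second can’t exceed bandwidth divided by model size. This ignores the KV cache, compute limits, and batching, and the bandwidth and size figures below are illustrative round numbers, not measurements.

```python
def max_tokens_per_second(bandwidth_gb_s: float, model_gb: float) -> float:
    """Upper bound for single-stream decoding of a dense model.

    Each token streams every weight from memory once, so the memory bus
    can deliver at most bandwidth / model_size tokens per second.
    """
    return bandwidth_gb_s / model_gb


# Illustrative round numbers, not benchmarks:
print(max_tokens_per_second(400, 40))    # 10.0: a ~40GB quant on a ~400GB/s unified-memory machine
print(max_tokens_per_second(1000, 18))   # ~55.6: an ~18GB quant on a ~1TB/s GPU
```

This is also why spilling part of a model into much slower system RAM hurts so badly: the slowest memory holding weights dominates the per-token pass.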

Yeah, I’ve had decent results running the 7B/8B models, particularly the fine-tuned ones for specific use cases. But as ya mentioned, they’re only really good in their scope for a single prompt or maybe a few follow-ups. I’ve seen little improvement with the 13B/14B models and find them mostly not worth the performance hit.

Depends which 14B. Arcee’s 14B SuperNova Medius model (which is a Qwen 2.5 with some training distilled from larger models) is really incredible, but old Llama 2-based 13B models are awful.

I’ll try it out! It’s been a hot minute, and it seems like there are new options all the time.

Try a new quantization as well! Like an IQ4-M depending on the size of your GPU, or even better, a 4.5bpw exl2 with Q6 cache if you can manage to set up TabbyAPI.

If you “talk to” a LLM on your GPU, it is not making any calls to the internet,

No, I’m talking about https://en.m.wikipedia.org/wiki/External_memory_algorithm
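For anyone who hasn’t run into the term: an external-memory algorithm works on data larger than RAM by streaming it through in chunks and keeping intermediate results on disk. The textbook example is external merge sort, sketched here with only the Python standard library. The chunk size is an arbitrary illustration, and this says nothing about how any LLM runtime actually works.

```python
import heapq
import os
import tempfile
from typing import Iterable, Iterator


def external_sort(values: Iterable[int], chunk_size: int) -> Iterator[int]:
    """Sort integers while keeping at most `chunk_size` of them in memory.

    Phase 1: fill a chunk, sort it in RAM, spill it to a temporary file ("run").
    Phase 2: k-way merge the sorted runs, holding one pending value per run.
    """
    run_paths: list[str] = []
    chunk: list[int] = []

    def spill() -> None:
        chunk.sort()
        fd, path = tempfile.mkstemp(suffix=".run", text=True)
        with os.fdopen(fd, "w") as f:
            f.writelines(f"{v}\n" for v in chunk)
        run_paths.append(path)
        chunk.clear()

    for v in values:
        chunk.append(v)
        if len(chunk) >= chunk_size:
            spill()
    if chunk:
        spill()

    files = [open(p) for p in run_paths]
    try:
        # heapq.merge consumes the run files lazily instead of loading them whole.
        for line in heapq.merge(*files, key=int):
            yield int(line)
    finally:
        for f in files:
            f.close()
        for p in run_paths:
            os.remove(p)


print(list(external_sort([5, 3, 9, 1, 7, 2, 8], chunk_size=3)))  # [1, 2, 3, 5, 7, 8, 9]
```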

Unrelated to RAGs

I’ve got a 3090, and I feel ya. Even 24 gigs is hitting the cap pretty often and slowing to a crawl once system ram starts being used.

You can’t let it overflow if you’re running LLMs on Windows. There’s a toggle for it in the Nvidia settings; then get llama.cpp to offload through its own settings (or better yet, use exllama instead).

But…. Yeah. Qwen 32B fits in 24GB perfectly, and it’s great, but 72B really feels like the intelligence tipping point where I can dump so many API models, and that barely won’t fit in 24GB.
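The “fits in 24GB” math is mostly parameter count times bits per weight. A rough sketch, counting weights only: the KV cache and runtime overhead come on top, which is why a model needs headroom rather than just squeezing under the limit. The 4.5bpw figure matches the exl2 suggestion above, and the 22GB weight budget is an arbitrary example.

```python
def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def max_bpw(weight_budget_gb: float, params_billion: float) -> float:
    """Highest average bits per weight that fits in a given weight budget."""
    return weight_budget_gb * 8 / params_billion


print(weights_gb(32, 4.5))  # 18.0 GB, leaving ~6GB of a 24GB card for context
print(weights_gb(72, 4.5))  # 40.5 GB, nowhere near 24GB
print(max_bpw(22, 72))      # ~2.44 bpw needed to squeeze 72B weights into 22GB
```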

Core i9 - Well there’s your problem.

No NVMe M.2s? What a noob! HDDs in this day and age!?!? Would you like a floppy disk with that?

4 slots of RAM? What is this, children’s playtime hour? You are only supposed to have 2 slots of RAM installed for optimum overclocking.

Does the dude even 8K 300fps ray trace antialias his YouTube videos!?!? I bet he caps out his Chrome tabs below a thousand.

HDD for long-term storage. More reliable, and it has a higher number of read/writes before failing (essentially infinite, assuming the drive never fails). Cheaper and higher capacity than any SSD or M.2. Also, if you don’t keep applying a small electrical charge to an M.2, it eventually loses the data; an HDD doesn’t lose data as easily. Data recovery is also easier with an HDD. Finally, you know when an HDD is on its way out, as it will show slower write speeds, get noisier, etc.

I used to work in a service desk looking after maybe… 4000 desktops and 2000 laptops for a hospital, and the amount of SSD and M.2 failures we had was very costly.

I actually only installed M.2 a few years back when I went serious on my PC. I’m aware of the issues, although it’s still running good. I wonder how long it will last. I still have a few IDE drives, and some no longer can be read. Not because they’ve lost the data, but it just doesn’t spin up correctly. It will be interesting to see how it works out, at the moment I’m keeping an eye out on the health using CrystalDiskInfo. There’s certainly been cases of M.2 sticks with shitty firmware, but so far I seem to have avoided them. I’m also trying out a RAIDed M.2 mini NAS, it will be fun to see how that works out compared to the traditional NAS.

NVMe drives are SSDs too, using flash memory. NVMe is just the protocol.

Although I was joking, I do tend to assume that people who say SSD mean traditional SATA SSDs and not M.2.

I think they were saying that read/write speeds from an NVMe drive would be faster than those of an (unspecified) SATA drive. But that was my assumption while reading.

SATA SSDs are still more than fast enough to saturate a 2.5G ethernet connection. Some HDDs can even saturate 2.5G on large sequential reads and writes. The higher speed from M.2 NVMe drives isn’t very useful when they overheat and thermal throttle quickly. You need U.2 or EDSFF drives for sustained high speed transfers.
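Quick unit conversion behind the 2.5G claim: network speeds are quoted in gigabits, drive speeds in megabytes. SATA III’s 6 Gbit/s link uses 8b/10b encoding, so at most 600 MB/s of data gets through; 2.5GbE carries about 312.5 MB/s before Ethernet and TCP/IP overhead, so the real figure is somewhat lower. These are interface ceilings, not drive benchmarks.

```python
def link_mb_per_s(gbit_per_s: float, encoding_efficiency: float = 1.0) -> float:
    """Convert a link rate in Gbit/s to usable MB/s (1 MB = 1e6 bytes)."""
    return gbit_per_s * 1000 / 8 * encoding_efficiency


ethernet_2_5g = link_mb_per_s(2.5)   # 312.5 MB/s, before protocol overhead
sata3 = link_mb_per_s(6.0, 8 / 10)   # 600 MB/s after 8b/10b encoding

print(f"2.5GbE: {ethernet_2_5g:.1f} MB/s, SATA III: {sata3:.1f} MB/s")
print("SATA link outruns 2.5GbE:", sata3 > ethernet_2_5g)
```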

Exactly. NVMe for my gaming desktop, HDD and SATA SSD for my NAS.